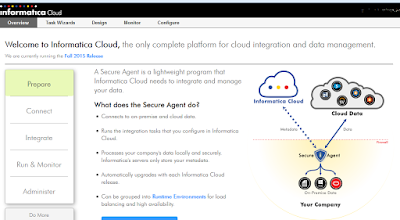

What is Informatica Cloud Services?

Informatica Cloud Services is Informatica's web-based, cloud-hosted product for data integration. Mappings and other data integration tasks are created, executed, and monitored from the web-based tool. A Secure Agent installed in the local environment makes it possible to read data from and write data to the cloud. Connections can be created to databases such as Amazon Redshift, SQL Server, Oracle, and Netezza, as well as to network folders. Because ETL tasks are built on the web, no PowerCenter server is needed on your premises, which makes this a cheaper option for creating Informatica tasks.

The components of the Informatica Cloud Services (ICS) tool set are listed below:

Data synchronization and Data replication:

As the name implies, this component synchronizes databases. Data is copied from the source to the targets, with any data filters applied along the way. A single table or multiple tables can be synchronized. ICS provides built-in connectors for creating the connections to the source and target tables.
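The copy-with-filter idea can be illustrated with a small sketch. This is not the ICS API or how ICS implements synchronization; it is a conceptual example using Python's built-in `sqlite3` module, and the `synchronize` function, the `customers` table, and the filter are all hypothetical names chosen for illustration.

```python
import sqlite3

def synchronize(source, target, table, columns, row_filter=None, params=()):
    """Copy rows of `table` from source to target, optionally filtered.

    Hypothetical helper for illustration only; it is not part of ICS.
    """
    col_list = ", ".join(columns)
    query = f"SELECT {col_list} FROM {table}"
    if row_filter:
        query += f" WHERE {row_filter}"  # the data filter applied during the copy
    rows = source.execute(query, params).fetchall()

    placeholders = ", ".join("?" for _ in columns)
    with target:  # commits on success, rolls back on error
        target.executemany(
            f"INSERT OR REPLACE INTO {table} ({col_list}) VALUES ({placeholders})",
            rows,
        )
    return len(rows)

# Two in-memory databases stand in for the source and target connections.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Asha", "IN"), (2, "Ben", "US"), (3, "Chen", "US")],
)
source.commit()

# Synchronize only the rows that pass the filter.
copied = synchronize(
    source, target, "customers", ["id", "name", "country"],
    row_filter="country = ?", params=("US",),
)
print(f"{copied} rows synchronized")
```

In ICS the same choices (source, target, tables, filter) are made in the web-based tool rather than in code.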

Mapping configuration:

This component allows a task to be created from a mapping. Parameter files, variables, post-processing commands, session settings, and so on can be defined for the task. This is similar to a session in Informatica PowerCenter.

Power Center:

This component allows Informatica PowerCenter workflows to be imported into and executed from Informatica Cloud Services. The source and target connections in the PowerCenter workflow can be mapped to the connections available in ICS. When importing a PowerCenter workflow, make sure it was exported from the PowerCenter Repository Manager; otherwise, the import fails with an error.

Mappings

This component lets you create Informatica ETL mappings similar to those in Informatica PowerCenter. Not all PowerCenter features are available in ICS; for example, the SQL and Union transformations are not provided. If you need additional features, you may have to create a mapplet in PowerCenter, import it into ICS, and add it to the ICS mapping. ICS provides transformations such as Expression, Joiner, Filter, Lookup, Sorter, Aggregator, Mapplet, and Normalizer.

Task Flows

Task flows let you define a sequence of jobs to be executed. Any of the mapping configuration tasks you have created can be run one after another in a task flow. This is similar to a workflow in Informatica PowerCenter.
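The idea of running tasks in sequence can be sketched in a few lines of Python. This is only a conceptual illustration, not ICS code: `run_task_flow`, the task names, and the stop-on-first-failure behavior are assumptions made for the example.

```python
from typing import Callable, List, Tuple

# A task is a (name, callable) pair; the callable returns True on success.
Task = Tuple[str, Callable[[], bool]]

def run_task_flow(tasks: List[Task], stop_on_failure: bool = True) -> List[Tuple[str, str]]:
    """Run tasks in order and record a status for each one that ran.

    Hypothetical helper for illustration only; stopping on failure is an assumption.
    """
    results = []
    for name, task in tasks:
        try:
            ok = bool(task())
        except Exception:
            ok = False  # treat an exception as a failed task
        results.append((name, "SUCCESS" if ok else "FAILED"))
        if not ok and stop_on_failure:
            break  # later tasks are skipped
    return results

# Three stand-in tasks; the second one fails.
flow = [
    ("load_customers", lambda: True),
    ("load_orders", lambda: False),
    ("build_report", lambda: True),
]

results = run_task_flow(flow)
for name, status in results:
    print(f"{name}: {status}")
```

Here `build_report` never runs because `load_orders` failed first; passing `stop_on_failure=False` would run every task regardless.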

Integration templates

This component allows Informatica mapping templates created in Microsoft Visio to be imported and applied to mappings in ICS.

Activity Log/Monitor

This component lets you monitor all running tasks and review completed ones. It provides information such as the number of source and target rows and the session logs.

Mapplets

ICS mapplets work much like Informatica PowerCenter mapplets. This component is mostly used to import PowerCenter mapplets into ICS.

Connections

This component allows connections to be created to flat-file folders, databases, and so on. A wide range of connectors is available.