Importing a COBOL Copybook Definition in Informatica and Troubleshooting COBOL File Issues

To read a COBOL file, you first create a source definition and then read the data from the COBOL input file. Source definitions are created from COBOL copybook definitions. A copybook defines every field in the data file, along with its level number and occurrence (OCCURS) information.

How to import a COBOL copybook in Informatica?

In the Designer's Source Analyzer, use Sources > Import from COBOL File to create the source definition from the copybook.

Add the source definition to a mapping. Instead of a Source Qualifier, the Designer creates a Normalizer transformation. Read from the Normalizer and write to any target.
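Conceptually, the Normalizer turns each repeating (OCCURS) group into separate output rows. The Python sketch below illustrates that kind of flattening for the HOSPITAL-REC layout used later in this post. It is not Informatica's implementation: the record is modeled as a plain dict, and the DEPT_OCCURRENCE and FLOOR_OCCURRENCE column names are hypothetical (PowerCenter generates its own key and occurrence ports, and its handling of nested OCCURS may differ).

```python
def normalize_hospital_rec(record: dict) -> list:
    """Flatten one HOSPITAL-REC into one row per FLOOR-REC occurrence.

    Conceptual sketch only; the *_OCCURRENCE column names are hypothetical.
    """
    rows = []
    for dept_no, dept in enumerate(record["DEPT-REC"], start=1):
        for floor_no, floor in enumerate(dept["FLOOR-REC"], start=1):
            rows.append({
                "DEPT-ID": record["DEPT-ID"],
                "DEPT-NM": record["DEPT-NM"],
                "DEPT_OCCURRENCE": dept_no,    # which DEPT-REC (1..2)
                "AREA-ID": dept["AREA-ID"],
                "AREA-NM": dept["AREA-NM"],
                "FLOOR_OCCURRENCE": floor_no,  # which FLOOR-REC (1..5)
                "FLOOR-ID": floor["FLOOR-ID"],
                "FLOOR-NM": floor["FLOOR-NM"],
            })
    return rows


# Example record: DEPT-REC OCCURS 2 TIMES, each with FLOOR-REC OCCURS 5 TIMES.
record = {
    "DEPT-ID": 1,
    "DEPT-NM": "CARDIOLOGY",
    "DEPT-REC": [
        {
            "AREA-ID": area,
            "AREA-NM": f"AREA {area}",
            "FLOOR-REC": [{"FLOOR-ID": f, "FLOOR-NM": f"FLOOR {f}"} for f in range(1, 6)],
        }
        for area in (10, 20)
    ],
}
rows = normalize_hospital_rec(record)
print(len(rows))  # 10
```

Each row repeats the parent DEPT-ID and DEPT-NM, so one input record with 2 x 5 occurrences becomes 10 rows.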

Details on loading data from a COBOL copybook are in this video I found on YouTube:

https://www.youtube.com/watch?v=WeACh8AWQv8

Troubleshooting copybook import in Informatica:

Reading a COBOL file is not always straightforward; sometimes you have to edit the copybook to get it working. These are some of the error messages I saw while creating source definitions:

05 Error at line 176 : parse error

Error: FD FILE-ONE ignored - invalid or incomplete.

05 Error: Record not starting with 01 level at line 7

Resolving copybook import issues in Informatica:

1) Add the header and footer statements shown below (the division, section, and FD entries around the record layout), even if those divisions are empty:

```cobol
       identification division.
       program-id. mead.

       environment division.
           select file-one assign to "fname".

       data division.
       file section.
       fd FILE-ONE.

       01 HOSPITAL-REC.
           02 DEPT-ID PIC 9(15).
           02 DEPT-NM PIC X(25).
           02 DEPT-REC OCCURS 2 TIMES.
               03 AREA-ID PIC 9(5).
               03 AREA-NM PIC X(25).
               03 FLOOR-REC OCCURS 5 TIMES.
                   04 FLOOR-ID PIC 9(15).
                   04 FLOOR-NM PIC X(25).

       working-storage section.

       procedure division.
           stop run.
```

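2) Every line must start in column 8 or later. Columns 1-6 are the sequence area and column 7 is the indicator area, so indent each line by at least seven spaces; division, section, FD, and 01 entries belong in Area A (columns 8-11).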

3) Make sure no field line starts with "*"; every data entry must begin with a level number. (In fixed-format COBOL, an "*" in column 7 marks a comment line.)

4) The first record must be defined at level 01. If there is no 01 level, the copybook will not import (this is the "Record not starting with 01 level" error above).

5) Special levels such as 88 and 66 might not get imported. This appears to be a limitation of PowerCenter, and I could not find a way around it.
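Rules 2 to 4, plus the warning in rule 5, are easy to check before you import. The sketch below is a hypothetical Python pre-check (check_copybook is not part of Informatica); it only applies the rules listed here and is no substitute for PowerCenter's own COBOL parser.

```python
import re

# A data entry starts with a one- or two-digit level number followed by whitespace.
LEVEL_RE = re.compile(r"^(\d{1,2})\s")


def check_copybook(text: str) -> list:
    """Return (line_number, problem) pairs for the copybook import rules above."""
    problems = []
    first_level_seen = False
    for n, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # blank lines are fine
        if line[:7].strip():
            problems.append((n, "text in columns 1-7; start the line in column 8 or later"))
        body = line.strip()
        if body.startswith("*"):
            problems.append((n, "line starts with '*'; it needs a level number instead"))
            continue
        m = LEVEL_RE.match(body)
        if not m:
            continue  # division, section, FD, and other statements
        level = int(m.group(1))
        if not first_level_seen:
            first_level_seen = True
            if level != 1:
                problems.append((n, f"first record starts at level {level:02d}, not 01"))
        if level in (66, 88):
            problems.append((n, f"level {level} entries might not be imported"))
    return problems


with_errors = "05 DEPT-ID PIC 9(15).\n"
for line_no, problem in check_copybook(with_errors):
    print(line_no, problem)
```

Run against a copybook that triggers "Record not starting with 01 level", it flags both the missing indentation and the non-01 first level.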

Resolving COBOL data file issues in Informatica:

I had a hard time reading a COBOL file that was in EBCDIC format with COMP-3 fields in it. I expected most of the trouble to be in importing the copybook, but reading the file caused even more issues, even though it is supposed to be straightforward in Informatica PowerCenter. These are the issues I faced:

1) When reading the mainframe file, the COMP-3 fields were not read correctly.

2) The field alignment was wrong: values shifted into the next columns, with a cascading effect on the fields after them.

To resolve both issues, I asked for the mainframe file to be sent in binary format. Files transferred from the mainframe by FTP in ASCII mode cause both problems. In ASCII mode every byte is translated from EBCDIC to ASCII, which corrupts COMP-3 (packed decimal) fields because their bytes hold digit pairs and a sign, not characters.
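To see why the transfer mode matters, here is a minimal Python sketch of COMP-3 decoding: each byte holds two decimal digits, and the low nibble of the last byte holds the sign. The second half approximates an ASCII-mode transfer by decoding the bytes with Python's cp037 (EBCDIC US English) codec and re-encoding them as Latin-1; a real FTP server's translation table may differ, so treat it as an illustration only. unpack_comp3 is a hypothetical helper and accepts only the C, D, and F sign nibbles.

```python
from decimal import Decimal

# Sign nibble -> multiplier: C positive, F unsigned (positive), D negative.
VALID_SIGNS = {0xC: 1, 0xF: 1, 0xD: -1}


def unpack_comp3(field: bytes, scale: int = 0) -> Decimal:
    """Decode a COMP-3 (packed decimal) field: two digits per byte, sign in the last nibble."""
    if not field:
        raise ValueError("empty field")
    digits = []
    for byte in field[:-1]:
        digits += [byte >> 4, byte & 0x0F]
    digits.append(field[-1] >> 4)
    sign = field[-1] & 0x0F
    if sign not in VALID_SIGNS or any(d > 9 for d in digits):
        raise ValueError(f"not valid packed decimal: {field.hex()}")
    value = int("".join(str(d) for d in digits)) * VALID_SIGNS[sign]
    # Apply the implied decimal places, e.g. the V in PIC 9(3)V99 COMP-3 (3 bytes).
    return Decimal(value).scaleb(-scale)


packed = bytes([0x12, 0x34, 0x5C])     # +12345 as packed decimal
print(unpack_comp3(packed, scale=2))   # 123.45

# Approximate an ASCII-mode transfer: EBCDIC (cp037) -> Latin-1 character translation.
translated = packed.decode("cp037").encode("latin-1")
print(translated[-1] == 0x2A)          # True: the sign byte 0x5C became '*'
```

After the translation the sign nibble is no longer valid, so unpack_comp3(translated) raises ValueError instead of returning a number. A binary transfer leaves the bytes untouched.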

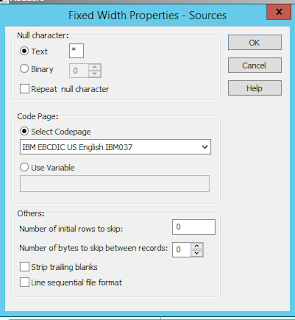

With the binary file and the properties shown in the screenshot above, it should have worked, but the output was all garbage because of an odd issue: the code page I set in the source properties in the Designer was, for some reason, not reflected in the session properties for that source. I had to change it manually in the session (Mapping tab > the source > file properties > Advanced), setting the code page to IBM EBCDIC US English IBM037 and Number of Bytes to Skip Between Records to 0. After that it finally worked.
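Those two session settings roughly correspond to simple operations on the binary file: the code page decides how bytes become characters, and the bytes to skip decide where each record starts. Below is a minimal Python sketch, assuming fixed-length records stored back to back with nothing between them. split_records is a hypothetical helper, not Informatica's reader, and cp037 is Python's codec for EBCDIC US English (IBM037).

```python
def split_records(data: bytes, record_length: int, skip_between: int = 0):
    """Yield fixed-length records, skipping `skip_between` bytes after each record."""
    pos = 0
    while pos + record_length <= len(data):
        yield data[pos:pos + record_length]
        pos += record_length + skip_between
    if pos < len(data):
        raise ValueError(
            f"{len(data) - pos} leftover bytes: check record length and bytes to skip"
        )


# Two 8-byte EBCDIC records with no separators, as in a binary transfer.
data = "ABC 0001XYZ 0002".encode("cp037")

print([r.decode("cp037") for r in split_records(data, 8)])  # ['ABC 0001', 'XYZ 0002']
print(data[:3].decode("latin-1"))                            # ÁÂÃ (wrong code page)
```

With skip_between=2 the second record no longer fits and the helper raises ValueError, the same kind of misalignment that shows up as shifted columns.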

To summarize, when reading a COBOL file:

1) Ask for the file to be FTP'ed from the mainframe in binary format.

2) In the session properties, set the code page and the number of bytes to skip between records as shown in the screenshot.

This should solve most problems with COMP-3 fields, field alignment, and so on.

If it does not, try unchecking the IBM COMP option in the source properties in the Designer, and experiment with the code page and the number of bytes to skip until the data reads correctly.

If you see dots at the end of some COMP-3 values, you are most likely writing numeric data into a varchar field. Write it to numeric columns in the database instead and the dots should disappear.

Another limitation of Informatica PowerCenter is that it can only read fixed-width files. If the copybook has an OCCURS ... DEPENDING ON clause, you most likely have a variable-length file, and PowerCenter does not handle variable-length files correctly. Ask the mainframe team to convert it to a fixed-width file.
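In a fixed-width file every record has the same length, which you can work out from the copybook. The Python sketch below computes it for the HOSPITAL-REC example in this post: every field is DISPLAY, so PIC 9(n) and PIC X(n) each take n bytes. It then shows how the length would vary if DEPT-REC were declared OCCURS 1 TO 2 TIMES DEPENDING ON a hypothetical two-byte DEPT-CNT PIC 9(2) counter. That counter field and the odo_record_length function are assumptions for illustration only.

```python
# Byte sizes of the DISPLAY fields in HOSPITAL-REC (PIC 9(n) / PIC X(n) = n bytes).
FLOOR_REC = 15 + 25                    # FLOOR-ID PIC 9(15) + FLOOR-NM PIC X(25)
DEPT_REC = 5 + 25 + 5 * FLOOR_REC      # AREA-ID + AREA-NM + FLOOR-REC OCCURS 5 TIMES
HOSPITAL_REC = 15 + 25 + 2 * DEPT_REC  # DEPT-ID + DEPT-NM + DEPT-REC OCCURS 2 TIMES


def odo_record_length(dept_count: int) -> int:
    """Record length if DEPT-REC were OCCURS 1 TO 2 TIMES DEPENDING ON a
    hypothetical DEPT-CNT PIC 9(2) counter placed after DEPT-NM."""
    if not 1 <= dept_count <= 2:
        raise ValueError("dept_count must be 1 or 2")
    return 15 + 25 + 2 + dept_count * DEPT_REC


print(HOSPITAL_REC)                             # 500
print([odo_record_length(n) for n in (1, 2)])   # [272, 502]
```

With OCCURS 2 TIMES every record is 500 bytes, so the reader always knows where the next record starts. With DEPENDING ON, the length depends on a value inside each record, which is what makes the file variable-length.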